Accelerate MLOps with Backstage and self-service Amazon SageMaker AI templates

Ashish Bhatt, Shashank Hirematt, and Shivanshu Suryakar, Amazon Web Services

Summary

Organizations that use machine learning operations (MLOps) systems face significant challenges in scaling, standardizing, and securing their ML infrastructure. This pattern combines Backstage, an open source framework for building internal developer portals, with preconfigured Amazon SageMaker AI templates and infrastructure as code (IaC) modules.

The IaC modules for this pattern are provided in the GitHub AWS AIOps modules repository.

By using Backstage as a self-service platform and integrating preconfigured SageMaker AI templates, you can:

Accelerate time to value for your ML initiatives.

Help enforce consistent security and governance.

Provide data scientists with standardized, compliant environments.

Reduce operational overhead and infrastructure complexity.

This pattern addresses these critical MLOps challenges and provides a scalable, repeatable framework that supports innovation while maintaining organizational standards.

Target audience

This pattern is intended for a broad audience involved in ML, cloud architecture, and platform engineering within an organization. This includes:

ML engineers who want to standardize and automate ML workflow deployments.

Data scientists who want self-service access to preconfigured and compliant ML environments.

Platform engineers who are responsible for building and maintaining internal developer platforms and shared infrastructure.

Cloud architects who design scalable, secure, and cost-effective cloud solutions for MLOps.

DevOps engineers who are interested in extending continuous integration and continuous delivery (CI/CD) practices to ML infrastructure provisioning and workflows.

Technical leads and managers who oversee ML initiatives and want to improve team productivity, governance, and time to market.

For more information about MLOps challenges, SageMaker AI MLOps modules, and how the solution provided by this pattern can address the needs of your ML teams, see the Additional information section.

Prerequisites and limitations

Prerequisites

AWS Identity and Access Management (IAM) roles and permissions for provisioning resources into your AWS account

An understanding of Amazon SageMaker Studio, SageMaker Projects, SageMaker Pipelines, and SageMaker Model Registry concepts

An understanding of IaC principles and experience with tools such as the AWS Cloud Development Kit (AWS CDK)

Limitations

Limited template coverage. Currently, the solution supports only the SageMaker AI-related AIOps modules from the broader AIOps solution. Other modules, such as Ray on Amazon Elastic Kubernetes Service (Amazon EKS), MLflow, Apache Airflow, and fine-tuning for Amazon Bedrock, are not yet available as Backstage templates.

Non-configurable default settings. Templates use fixed default configurations from the AIOps SageMaker modules and can't be customized. You cannot modify instance types, storage sizes, networking configurations, or security policies through the Backstage interface, which limits flexibility for specific use cases.

AWS-only support. The platform is designed exclusively for AWS deployments and doesn't support multicloud scenarios. Organizations that use cloud services outside the AWS Cloud cannot use these templates for their ML infrastructure needs.

Manual credential management. You must manually provide your AWS credentials for each deployment. This solution doesn’t provide integration with corporate identity providers, AWS IAM Identity Center, or automated credential rotation.

Limited lifecycle management. The templates lack comprehensive resource lifecycle management features such as automated cleanup policies, cost optimization recommendations, and infrastructure drift detection. You must manually manage and monitor deployed resources after creation.

Architecture

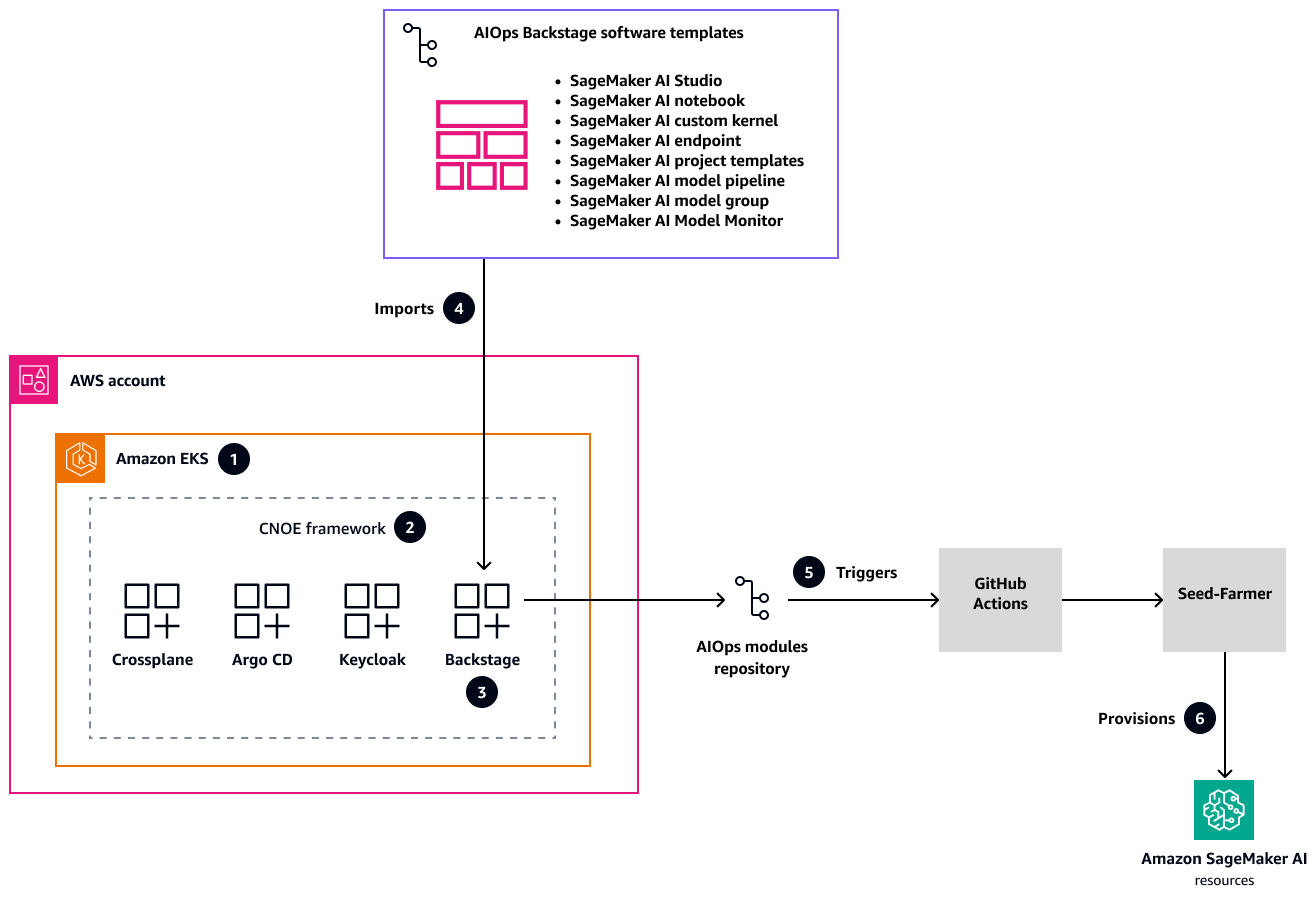

The following diagram shows the solution architecture for a unified developer portal that standardizes and accelerates ML infrastructure deployment with SageMaker AI across environments.

In this architecture:

AWS application modernization blueprints provision the infrastructure setup with an Amazon EKS cluster as a base for the Cloud Native Operational Excellence (CNOE) framework. This solution addresses complex cloud-native infrastructure management challenges by providing a scalable internal developer platform (IDP). The blueprints offer a structured approach to setting up a robust, flexible infrastructure that can adapt to your evolving organizational needs. The CNOE open source framework consolidates DevOps tools and reduces ecosystem fragmentation through a unified platform engineering approach. By bringing together disparate tools and technologies, it simplifies cloud-native development, so your teams can focus on innovation instead of toolchain management. The framework provides a standardized methodology for selecting, integrating, and managing development tools.

With CNOE, Backstage is deployed as an out-of-the-box solution within the Amazon EKS cluster. Backstage is bundled with authentication through Keycloak and deployment workflows through Argo CD. This integrated platform creates a centralized environment for managing development processes and provides a single place for teams to access, deploy, and monitor their infrastructure and applications across multiple environments.

A GitHub repository contains preconfigured AIOps software templates that cover the entire SageMaker AI lifecycle. These templates address critical ML infrastructure needs, including SageMaker Studio provisioning, model training, inference pipelines, and model monitoring. They help you accelerate your ML initiatives and maintain consistency across projects and teams.

GitHub Actions implements an automated workflow that dynamically triggers resource provisioning through the Seed-Farmer utility. This approach integrates the Backstage catalog with the AIOps modules repository and streamlines infrastructure deployment. The automation reduces manual intervention, minimizes human error, and provides fast, consistent infrastructure creation across environments. The AWS CDK defines and provisions the infrastructure as code, which helps make resource deployments across the specified AWS accounts repeatable, secure, and compliant. As a result, you get strong governance with little manual effort, and you can create standardized infrastructure templates that are easy to replicate, version-control, and audit.
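To make the provisioning flow more concrete, the following Python sketch shows how a GitHub Actions step might turn the values that a user enters in Backstage into a Seed-Farmer deployment manifest. The manifest keys (`name`, `toolchainRegion`, `groups`, `targetAccountMappings`, `regionMappings`) are modeled on the Seed-Farmer deployment manifest format, but the function name, group name, and file path are hypothetical. Check the Seed-Farmer documentation and the AIOps IDP repository for the exact schema that the templates use.

```python
import json


def build_deployment_manifest(
    deployment_name: str,
    account_id: str,
    region: str,
    group_name: str = "sagemaker",  # hypothetical group name
    group_path: str = "manifests/sagemaker-modules.yaml",  # hypothetical module manifest path
) -> dict:
    """Build a minimal deployment manifest whose keys are modeled on Seed-Farmer's format."""
    # An AWS account ID is a 12-digit number.
    if not (account_id.isdigit() and len(account_id) == 12):
        raise ValueError(f"invalid AWS account ID: {account_id!r}")
    return {
        "name": deployment_name,
        "toolchainRegion": region,
        # Each group points to a module manifest that lists the modules to deploy.
        "groups": [{"name": group_name, "path": group_path}],
        # Deploy to a single target account and Region.
        "targetAccountMappings": [
            {
                "alias": "primary",
                "accountId": account_id,
                "default": True,
                "regionMappings": [{"region": region, "default": True}],
            }
        ],
    }


if __name__ == "__main__":
    manifest = build_deployment_manifest("ml-studio-dev", "111122223333", "us-east-1")
    # JSON output like this is also valid YAML, so it can be saved as a manifest file.
    print(json.dumps(manifest, indent=2))
```

A workflow step could save this output to a file and pass it to `seedfarmer apply`. Keeping manifest generation in a small, testable function makes it easier to review what each Backstage form submission will deploy.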

Tools

AWS services

AWS Cloud Development Kit (AWS CDK) is a software development framework that helps you define and provision AWS Cloud infrastructure in code.

Amazon Elastic Kubernetes Service (Amazon EKS) helps you run Kubernetes on AWS without needing to install or maintain your own Kubernetes control plane or nodes.

Amazon SageMaker AI is a managed ML service that helps you build and train ML models and then deploy them into a production-ready hosted environment.

Other tools

Backstage is an open source framework that helps you build internal developer portals.

GitHub Actions is a CI/CD platform that automates software development workflows, including tasks such as building, testing, and deploying code.

Code repositories

This pattern uses code and templates from the following GitHub repositories:

AIOps internal developer platform (IDP) with Backstage repository

SageMaker AI-related modules from the AWS AIOps modules repository

Modern engineering on AWS repository

Implementation

This implementation uses a production-grade deployment pattern for Backstage from the Modern engineering on AWS repository.

The Epics section of this pattern outlines the implementation approach. For detailed, step-by-step deployment instructions, see the comprehensive deployment guide, which covers the following phases:

Initial Backstage platform deployment

Integration of SageMaker software templates with Backstage

Consuming and maintaining Backstage templates

The deployment guide also includes guidance for ongoing maintenance, troubleshooting, and platform scaling.

Best practices

Follow these best practices to help ensure security, governance, and operational excellence in your MLOps infrastructure implementations.

Template management

Never make breaking changes to live templates.

Always test updates thoroughly before production deployment.

Maintain clear and well-documented template versions.
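One way to apply the rule against breaking changes to live templates is to compare a template's input parameters across versions before you publish. Backstage software templates declare their inputs under `spec.parameters` as JSON Schema objects with `properties` and `required` keys. The following Python sketch assumes that you've already loaded one such parameter object from each version. It flags removed parameters, changed types, and newly required parameters that lack a default. The function name, the rules it applies, and the example parameter names are illustrative and aren't part of Backstage.

```python
from __future__ import annotations


def find_breaking_changes(old: dict, new: dict) -> list[str]:
    """Compare two JSON Schema parameter objects from consecutive template versions."""
    problems: list[str] = []
    old_props = old.get("properties", {})
    new_props = new.get("properties", {})
    old_required = set(old.get("required", []))
    new_required = set(new.get("required", []))

    # Removing a parameter or changing its type breaks users who already supply it.
    for name, spec in old_props.items():
        if name not in new_props:
            problems.append(f"parameter removed: {name}")
        elif spec.get("type") != new_props[name].get("type"):
            problems.append(f"type changed: {name}")

    # A newly required parameter breaks existing users unless it has a default.
    for name in sorted(new_required - old_required):
        if "default" not in new_props.get(name, {}):
            problems.append(f"new required parameter without default: {name}")
    return problems


if __name__ == "__main__":
    v1 = {
        "required": ["name"],
        "properties": {"name": {"type": "string"}, "instanceType": {"type": "string"}},
    }
    v2 = {
        "required": ["name", "domainName"],
        "properties": {"name": {"type": "string"}, "domainName": {"type": "string"}},
    }
    print(find_breaking_changes(v1, v2))
    # ['parameter removed: instanceType', 'new required parameter without default: domainName']
```

If the list isn't empty, you can publish the change as a new template version instead of updating the live one.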

Security

Pin GitHub Actions to full-length commit SHAs (commit hashes) to help prevent supply chain attacks.

Use least privilege IAM roles with granular permissions.

Store sensitive credentials in GitHub Secrets and AWS Secrets Manager. Never hardcode credentials in templates.
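To check the SHA-pinning practice automatically, for example in a pre-merge job for the templates repository, you can scan workflow files for `uses:` references that aren't pinned to a full 40-character commit SHA. The following Python sketch relies only on regular expressions, so it handles common single-line `uses:` entries rather than fully parsing YAML. The function name is hypothetical, and the SHA in the example is a placeholder.

```python
from __future__ import annotations

import re

# A full-length Git commit SHA is 40 hexadecimal characters.
FULL_SHA = re.compile(r"^[0-9a-f]{40}$")
# Matches single-line entries such as "- uses: owner/repo@ref".
USES_LINE = re.compile(r"^\s*-?\s*uses:\s*['\"]?([^'\"\s#]+)")


def unpinned_actions(workflow_text: str) -> list[str]:
    """Return action references in a workflow file that aren't pinned to a commit SHA."""
    unpinned = []
    for line in workflow_text.splitlines():
        match = USES_LINE.match(line)
        if not match:
            continue
        ref = match.group(1)
        # Local actions and Docker image references are out of scope for this sketch.
        if ref.startswith(("./", "docker://")):
            continue
        _, _, version = ref.partition("@")
        if not FULL_SHA.match(version):
            unpinned.append(ref)
    return unpinned


if __name__ == "__main__":
    workflow = """
steps:
  - uses: actions/checkout@v4
  # Placeholder SHA for illustration only.
  - uses: actions/setup-python@0123456789abcdef0123456789abcdef01234567
  - run: echo "deploying"
"""
    print(unpinned_actions(workflow))  # ['actions/checkout@v4']
```

A tag such as `@v4` can be moved to point at different code, whereas a commit SHA can't, which is why the check accepts only full SHAs.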

Governance and tracking

Implement comprehensive resource tagging standards.

Enable precise cost tracking and compliance monitoring.

Maintain clear audit trails for infrastructure changes.
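A tagging standard is easier to enforce when it's checked in code before resources are deployed, for example as a step in the provisioning workflow. The following Python sketch validates a resource's tags against a hypothetical set of required keys and allowed `Environment` values. It also applies two AWS tagging rules: keys can't start with the reserved `aws:` prefix, and keys and values are limited to 128 and 256 characters, respectively.

```python
from __future__ import annotations

# Hypothetical organizational standard; replace with your own keys and values.
REQUIRED_TAG_KEYS = ("CostCenter", "Owner", "Environment", "Project")
ALLOWED_ENVIRONMENTS = {"dev", "staging", "prod"}


def tag_violations(tags: dict[str, str]) -> list[str]:
    """List the ways a resource's tags break the tagging standard."""
    violations = [f"missing tag: {key}" for key in REQUIRED_TAG_KEYS if not tags.get(key)]

    environment = tags.get("Environment")
    if environment and environment not in ALLOWED_ENVIRONMENTS:
        violations.append(f"invalid Environment value: {environment}")

    for key, value in tags.items():
        # AWS reserves the aws: prefix for its own tags.
        if key.lower().startswith("aws:"):
            violations.append(f"reserved prefix in key: {key}")
        # AWS limits tag keys to 128 characters and values to 256 characters.
        if len(key) > 128 or len(value) > 256:
            violations.append(f"key or value too long: {key}")
    return violations


if __name__ == "__main__":
    print(tag_violations({"Owner": "ml-platform", "Environment": "qa"}))
    # ['missing tag: CostCenter', 'missing tag: Project', 'invalid Environment value: qa']
```

Failing the workflow when this list isn't empty keeps untagged resources out of your accounts, which supports the cost tracking and compliance monitoring described above.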

This guide provides a strong foundation for implementing these best practices by using Backstage, SageMaker AI, and IaC modules.

Epics

| Task | Description | Skills required |
|---|---|---|
| Deploy Backstage. | This step uses the blueprints in the Modern engineering on AWS repository. The infrastructure uses Amazon EKS as a container orchestration platform for deploying IDP components. The Amazon EKS architecture includes secure networking configurations to establish strict network isolation and control access patterns. The platform integrates with authentication mechanisms to help secure user access across services and environments. | Platform engineer |
| Set up your SageMaker AI templates. | This step uses the scripts in the GitHub AIOps internal developer platform (IDP) with Backstage repository. This process creates a repository that contains the SageMaker AI templates that are required for integration with Backstage. | Platform engineer |
| Integrate the SageMaker AI templates with Backstage. | Follow the instructions in the SageMaker templates integration documentation. This step integrates the AIOps modules (the SageMaker AI templates from the previous step) into your Backstage deployment so that you can provision your ML infrastructure through self-service. | Platform engineer |
| Use the SageMaker AI templates from Backstage. | Follow the instructions in the Using SageMaker templates documentation. In the Backstage portal, you can select from available SageMaker AI templates, including options for SageMaker Studio environments, SageMaker notebooks, custom SageMaker project templates, and model deployment pipelines. After you provide configuration parameters, the platform automatically creates dedicated repositories and provisions AWS resources through GitHub Actions and Seed-Farmer. You can monitor progress through GitHub Actions logs and the Backstage component catalog. | Data scientist, Data engineer, Developer |

| Task | Description | Skills required |
|---|---|---|
| Update SageMaker AI templates. | To update a SageMaker AI template in Backstage, follow these steps. | Platform engineer |
| Create and manage multiple versions of a template. | For breaking changes or upgrades, you might want to create multiple versions of a SageMaker AI template. | Platform engineer |

| Task | Description | Skills required |
|---|---|---|
| Expand template coverage beyond SageMaker AI. | The current solution implements only SageMaker AI-related AIOps templates. You can extend the ML environment by adding other AIOps modules as Backstage templates. You can also implement template inheritance patterns to create specialized versions of base templates. This extensibility enables you to manage diverse AWS resources and applications beyond SageMaker AI while preserving the simplified developer experience and maintaining your organization’s standards. | Platform engineer |
| Use dynamic parameter injection. | The current templates use default configurations without customization, and run the Seed-Farmer CLI to deploy resources with default variables. You can extend the default configuration by using dynamic parameter injection for module-specific configurations. | Platform engineer |
| Enhance security and compliance. | To enhance security in the creation of AWS resources, you can enable role-based access control (RBAC) integration with single sign-on (SSO), SAML, OpenID Connect (OIDC), and policy as code enforcement. | Platform engineer |
| Add automated resource cleanup. | You can enable features for automated cleanup policies, and also add infrastructure drift detection and remediation. | Platform engineer |
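The following is a minimal sketch of dynamic parameter injection. It assumes that each module exposes a set of default parameters and that the Backstage form passes user-selected overrides to the workflow. The function rejects overrides that the module doesn't declare and emits `name`/`value` pairs, which is the shape this sketch assumes for the `parameters` list in a Seed-Farmer module manifest. The function name and the parameter names in the example are hypothetical and don't correspond to specific AIOps module parameters.

```python
from __future__ import annotations

from typing import Any


def inject_parameters(
    defaults: dict[str, Any], overrides: dict[str, Any]
) -> list[dict[str, Any]]:
    """Merge user-supplied overrides into module defaults as name/value entries."""
    unknown = sorted(set(overrides) - set(defaults))
    if unknown:
        # Reject parameters that the module doesn't declare instead of passing them through.
        raise ValueError(f"unknown parameters: {', '.join(unknown)}")
    # Overrides replace default values; key order follows the defaults.
    merged = {**defaults, **overrides}
    return [{"name": name, "value": value} for name, value in merged.items()]


if __name__ == "__main__":
    defaults = {"instance-type": "ml.t3.medium", "volume-size-gb": 20}
    print(inject_parameters(defaults, {"instance-type": "ml.m5.large"}))
    # [{'name': 'instance-type', 'value': 'ml.m5.large'}, {'name': 'volume-size-gb', 'value': 20}]
```

Validating overrides against the declared defaults keeps the self-service form flexible without letting users pass arbitrary settings to the deployment.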

| Task | Description | Skills required |
|---|---|---|
| Remove the Backstage infrastructure and SageMaker AI resources. | When you’ve finished using your ML environment, follow the instructions in the Cleanup and resource management documentation. | Platform engineer |

Troubleshooting

| Issue | Solution |
|---|---|
| AWS CDK bootstrap failures | Verify AWS credentials and Region configuration. |
| Amazon EKS cluster access issues | Check kubectl configuration and IAM permissions. |
| Application Load Balancer connectivity issues | Make sure that security groups allow inbound traffic on ports 80 and 443. |
| GitHub integration issues | Verify GitHub token permissions and organization access. |
| SageMaker AI deployment failures | Check AWS service quotas and IAM permissions. |
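To check the Application Load Balancer item in the table, you can inspect the security group rules attached to the load balancer. The following Python sketch works on an `IpPermissions` list in the shape that the Amazon EC2 `DescribeSecurityGroups` API returns (for example, through `describe_security_groups` in boto3). Fetching that data is left out so the sketch runs without AWS access. The sketch checks only protocol and port range, not the allowed source addresses, and the function name is hypothetical.

```python
from __future__ import annotations

from typing import Any


def allows_inbound_port(ip_permissions: list[dict[str, Any]], port: int) -> bool:
    """Return True if any inbound rule allows TCP traffic on `port`."""
    for rule in ip_permissions:
        protocol = str(rule.get("IpProtocol"))
        if protocol == "-1":
            return True  # "-1" means all protocols and all ports.
        if protocol not in ("tcp", "6"):  # TCP can appear by name or protocol number.
            continue
        from_port, to_port = rule.get("FromPort"), rule.get("ToPort")
        if from_port is not None and to_port is not None and from_port <= port <= to_port:
            return True
    return False


if __name__ == "__main__":
    rules = [{"IpProtocol": "tcp", "FromPort": 443, "ToPort": 443,
              "IpRanges": [{"CidrIp": "10.0.0.0/16"}]}]
    missing = [p for p in (80, 443) if not allows_inbound_port(rules, p)]
    print(missing)  # [80]
```

In this example, HTTPS is allowed but HTTP on port 80 isn't, so requests to the load balancer over HTTP would fail until a matching rule is added.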

Related resources

Platform engineering (in the guide AWS Cloud Adoption Framework: Platform perspective)

Backstage Software Templates (Backstage website)

AIOps modules repository (collection of reusable IaC modules for ML)

AIOps internal developer platform (IDP) with Backstage repository

Modern engineering on AWS repository

Additional information

Business challenges

Organizations that embark on or scale their MLOps initiatives frequently encounter these business and technical challenges:

Inconsistent environments. The lack of standardized development and deployment environments makes collaboration difficult and increases deployment risks.

Manual provisioning overhead. Manually setting up an ML infrastructure with SageMaker Studio, Amazon Simple Storage Service (Amazon S3) buckets, IAM roles, and CI/CD pipelines is time-consuming and error-prone, and diverts data scientists from their core task of model development.

Lack of discoverability and reuse. The lack of a centralized catalog makes it difficult to find existing ML models, datasets, and pipelines. This leads to redundant work and missed opportunities for reuse.

Complex governance and compliance. Ensuring that ML projects adhere to organizational security policies, data privacy regulations, and compliance standards such as Health Insurance Portability and Accountability Act (HIPAA) and General Data Protection Regulation (GDPR) can be challenging without automated guardrails.

Slow time to value. The cumulative effect of these challenges results in protracted ML project lifecycles and delays the realization of business value from ML investments.

Security risks. Inconsistent configurations and manual processes can introduce security vulnerabilities that make it difficult to enforce least privilege and network isolation.

These issues prolong development cycles, increase operational overhead, and introduce security risks. The iterative nature of ML requires repeatable workflows and efficient collaboration.

Gartner predicts that by 2026, 80% of software engineering organizations will have platform teams. (See Platform Engineering Empowers Developers to be Better, Faster, Happier.)

MLOps SageMaker modules

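The AIOps modules repository provides a collection of reusable IaC modules for ML, including the SageMaker AI-related modules that this pattern uses.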

Using the AIOps modules directly often requires platform teams to deploy and manage these IaC templates, which can present challenges for data scientists who want self-service access. Discovering and understanding the available templates, configuring the necessary parameters, and triggering their deployment might require navigating AWS service consoles or directly interacting with IaC tools. This can create friction, increase cognitive load for data scientists who prefer to focus on ML tasks, and potentially lead to inconsistent parameterization or deviations from organizational standards if these templates aren’t managed through a centralized and user-friendly interface. Integrating these powerful AIOps modules with an IDP such as Backstage helps address these challenges by providing a streamlined, self-service experience, enhanced discoverability, and stronger governance controls for using these standardized MLOps building blocks.

Backstage as IDP

An internal developer platform (IDP) is a self-service layer built by platform teams to simplify and standardize how developers build, deploy, and manage applications. It abstracts infrastructure complexity and provides developers with easy access to tools, environments, and services through a unified interface.

The primary goal of an IDP is to enhance developer experience and productivity by:

Enabling self-service for tasks such as service creation and deployment.

Promoting consistency and compliance through standard templates.

Integrating tools across the development lifecycle (CI/CD, monitoring, and documentation).

Backstage is an open source developer portal that was created by Spotify and is now part of the Cloud Native Computing Foundation (CNCF). It helps organizations build their own IDP by providing a centralized, extensible platform to manage software components, tools, and documentation. With Backstage, developers can:

Discover and manage all internal services through a software catalog.

Create new projects by using predefined templates through the scaffolder plugin.

Access integrated tooling such as CI/CD pipelines, Kubernetes dashboards, and monitoring systems from one location.

Maintain consistent, markdown-based documentation through TechDocs.

FAQ

What's the difference between using this Backstage template versus deploying SageMaker Studio manually through the SageMaker console?

The Backstage template provides several advantages over manual AWS console deployment, including standardized configurations that follow organizational best practices, automated IaC deployment using Seed-Farmer and the AWS CDK, built-in security policies and compliance measures, and integration with your organization's developer workflows through GitHub. The template also creates reproducible deployments with version control, which make it easier to replicate environments across different stages (development, staging, production) and maintain consistency across teams. Additionally, the template includes automated cleanup capabilities and integrates with your organization's identity management system through Backstage. Manual deployment through the console requires deep AWS expertise and doesn’t provide version control or the same level of standardization and governance that the template offers. For these reasons, console deployments are more suitable for one-off experiments than production ML environments.

What is Seed-Farmer and why does this solution use it?

Seed-Farmer is an AWS deployment orchestration tool that manages infrastructure modules by using the AWS CDK. This pattern uses Seed-Farmer because it provides standardized, reusable infrastructure components that are specifically designed for AI/ML workloads, handles complex dependencies between AWS services automatically, and ensures consistent deployments across different environments.

Do I need to install the AWS CLI to use these templates?

No, you don't have to install the AWS CLI on your computer. The templates run entirely through GitHub Actions in the cloud. You provide your AWS credentials (access key, secret key, and session token) through the Backstage interface, and the deployment happens automatically in the GitHub Actions environment.
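The AWS SDKs, the AWS CDK, and the AWS CLI can all read credentials from the standard `AWS_ACCESS_KEY_ID`, `AWS_SECRET_ACCESS_KEY`, and `AWS_SESSION_TOKEN` environment variables, and the AWS CLI and boto3 read the Region from `AWS_DEFAULT_REGION`. The following Python sketch shows one way that a workflow step could expose the three values from the Backstage form to tools such as Seed-Farmer. This pattern doesn't describe how its templates actually pass credentials, so treat the function (whose name is hypothetical) as an illustration only.

```python
from __future__ import annotations

import os


def deployment_env(
    access_key_id: str, secret_access_key: str, session_token: str, region: str
) -> dict[str, str]:
    """Map user-supplied temporary credentials onto the standard AWS environment variables."""
    credentials = {
        "AWS_ACCESS_KEY_ID": access_key_id,
        "AWS_SECRET_ACCESS_KEY": secret_access_key,
        "AWS_SESSION_TOKEN": session_token,
        "AWS_DEFAULT_REGION": region,
    }
    missing = [name for name, value in credentials.items() if not value]
    if missing:
        raise ValueError(f"missing values for: {', '.join(missing)}")
    # Keep the runner's existing variables (such as PATH) and add the credentials.
    return {**os.environ, **credentials}
```

You might pass the result as the `env` argument of `subprocess.run` when you invoke `seedfarmer apply`. Don't print or log the returned dictionary, because it contains secrets.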

How long does it take to deploy a SageMaker Studio environment?

A typical SageMaker Studio deployment takes 15-25 minutes to complete. This includes AWS CDK bootstrapping (2-3 minutes), Seed-Farmer toolchain setup (3-5 minutes), and resource creation (10-15 minutes). The exact time depends on your AWS Region and the complexity of your networking setup.

Can I deploy multiple SageMaker environments in the same AWS account?

Yes, you can. Each deployment creates resources with unique names based on the component name you provide in the template. However, be aware of AWS service quotas: Each account can have a limited number of SageMaker domains per Region, so check your quotas before you create multiple environments.
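Before you create another environment, you can compare the number of existing SageMaker domains in the Region with your quota. The following Python sketch takes responses in the shape that the SageMaker `ListDomains` API returns (for example, from `list_domains` in boto3, following `NextToken` across pages) and a quota value that you look up in Service Quotas. The quota isn't hardcoded because it varies by account and Region, and the function name is hypothetical.

```python
from __future__ import annotations

from typing import Any


def domains_remaining(list_domains_pages: list[dict[str, Any]], quota: int) -> int:
    """Return how many more SageMaker domains can be created in this Region."""
    # Each ListDomains response page carries its domains in the "Domains" list.
    existing = sum(len(page.get("Domains", [])) for page in list_domains_pages)
    return max(quota - existing, 0)


if __name__ == "__main__":
    pages = [{"Domains": [{"DomainId": "d-111"}, {"DomainId": "d-222"}]}, {"Domains": []}]
    print(domains_remaining(pages, quota=5))  # 3
```

If the result is 0, request a quota increase or deploy the new environment in a different Region before you run the template.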