Overview of vectors

Vectors are numerical representations that help machines understand and process data. In generative AI, they serve two key purposes:

- Representing latent spaces that capture data structure in compressed form
- Creating embeddings for data like words, sentences, and images

Embedding models like Word2Vec do the following:

- Learn from context to represent words as vectors.
- Place similar words closer together in vector space.
- Enable machines to process data in a continuous space.
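To make "closer together in vector space" concrete, the following minimal sketch compares a few hand-picked vectors using cosine similarity. The three-dimensional values are made up for illustration and are not output from Word2Vec or any real model. Cosine similarity is assumed here as one common way to measure closeness.

```python
import math

# Hypothetical 3-dimensional embeddings (made-up values).
# Real embedding models typically produce hundreds of dimensions.
toy_embeddings = {
    "king": [0.9, 0.8, 0.1],
    "queen": [0.85, 0.82, 0.15],
    "banana": [0.1, 0.2, 0.95],
}


def cosine_similarity(a, b):
    """Return the cosine of the angle between vectors a and b."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


sim_related = cosine_similarity(toy_embeddings["king"], toy_embeddings["queen"])
sim_unrelated = cosine_similarity(toy_embeddings["king"], toy_embeddings["banana"])

print(f"king vs queen:  {sim_related:.3f}")
print(f"king vs banana: {sim_unrelated:.3f}")
```

With these toy values, the related pair scores noticeably higher than the unrelated pair, which is the property that similarity search over embeddings relies on.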

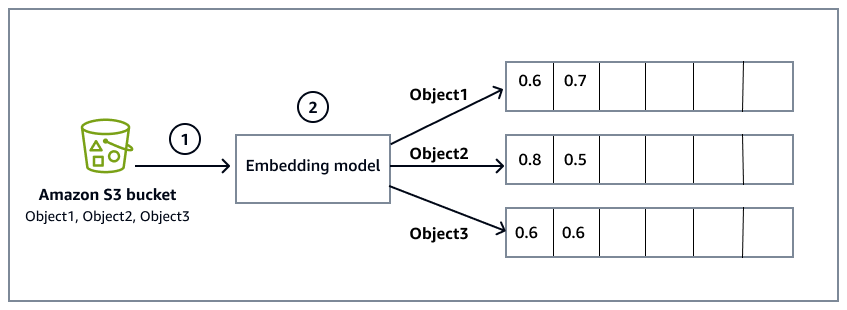

The following diagram provides a high-level overview of the embedding process:

- An Amazon Simple Storage Service (Amazon S3) bucket contains the files that serve as data sources for the system to read and process. The S3 bucket is specified during the Amazon Bedrock knowledge base configuration, which also includes syncing data with the knowledge base.
- The embedding model converts the raw data from the object files in the S3 bucket into vector embeddings. For example, Object1 is converted into a vector [0.6, 0.7, ...], which represents its content in a multi-dimensional space.
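The flow above can be sketched without any AWS calls. In this minimal sketch, a dictionary stands in for the S3 bucket, and `toy_embed` is a hypothetical placeholder for the embedding model. It only hashes words into a fixed number of buckets so that the shape of the output is visible. It does not capture meaning the way a real embedding model does, and none of these names come from the Amazon Bedrock API.

```python
import hashlib
import math

# Stand-in for the S3 bucket: object key -> text content (made-up sample data).
objects = {
    "Object1": "vectors help machines process data",
    "Object2": "embedding models convert text into vectors",
}


def toy_embed(text, dimensions=8):
    """Hypothetical placeholder for an embedding model.

    Counts words into hashed buckets, then scales the result to unit length.
    """
    vector = [0.0] * dimensions
    for word in text.lower().split():
        digest = hashlib.sha256(word.encode("utf-8")).digest()
        vector[digest[0] % dimensions] += 1.0
    norm = math.sqrt(sum(v * v for v in vector))
    return [v / norm for v in vector] if norm else vector


# Stand-in for wherever the vectors are stored: object key -> embedding.
vector_index = {key: toy_embed(text) for key, text in objects.items()}

for key, vector in vector_index.items():
    print(key, [round(v, 2) for v in vector])
```

Each object ends up as a fixed-length list of numbers keyed by its object name, which mirrors the Object1 to vector mapping in the diagram.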

Word embeddings are crucial for natural language processing (NLP) because they do the following:

- Capture semantic relationships between words.
- Enable generation of contextually relevant text.
- Power large language models (LLMs) to produce human-like responses.