Deploy agentic systems on Amazon Bedrock with the CrewAI framework by using Terraform

Vanitha Dontireddy, Amazon Web Services

Summary

This pattern shows how to implement scalable multi-agent AI systems by using the CrewAI framework.

Prerequisites and limitations

Prerequisites

An active AWS account with the appropriate permissions to access Amazon Bedrock foundation models

Python version 3.9 or later installed

Limitations

Agent interactions are limited by the context windows of the models.

Terraform state management considerations for large-scale deployments apply to this pattern.

Some AWS services aren't available in all AWS Regions. For Region availability, see AWS Services by Region. For specific endpoints, see Service endpoints and quotas, and choose the link for the service.

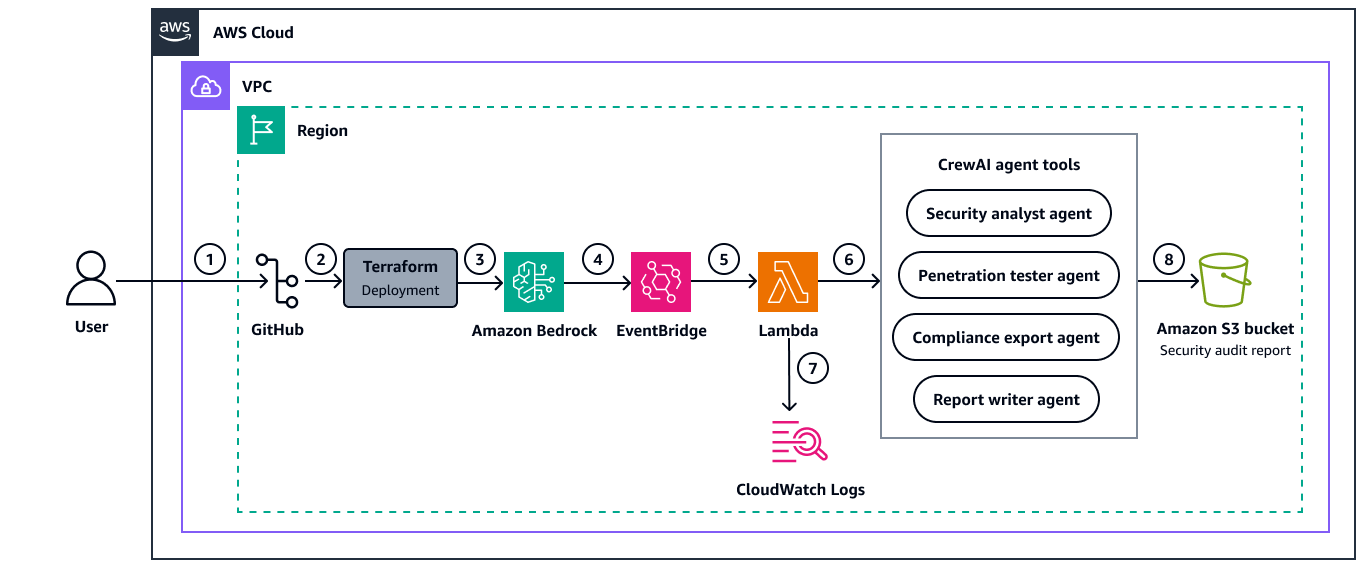

Architecture

In this pattern, the following interactions occur:

Amazon Bedrock provides the foundation for agent intelligence through its suite of foundation models (FMs). It gives the AI agents natural language processing (NLP), reasoning, and decision-making capabilities while maintaining high availability and scalability.

The CrewAI framework serves as the core orchestration layer for creating and managing the AI agents. It handles agent communication protocols, task delegation, and workflow management while integrating with Amazon Bedrock.

Terraform manages the entire infrastructure as code, including compute resources, networking, security groups, and AWS Identity and Access Management (IAM) roles. It ensures consistent, version-controlled deployments across all environments. The Terraform deployment creates the following:

An AWS Lambda function to run the CrewAI application

Amazon Simple Storage Service (Amazon S3) buckets for code and reports

IAM roles with the appropriate permissions

Amazon CloudWatch logging

Scheduled execution through Amazon EventBridge

The following diagram illustrates the architecture for deploying CrewAI multi-agent systems by using Amazon Bedrock and Terraform.

The diagram shows the following workflow:

The user clones the repository.

The user runs the terraform apply command to deploy the AWS resources.

The Amazon Bedrock model configuration specifies the foundation model (FM) that the CrewAI agents use.

An EventBridge rule is created to trigger the Lambda function on the defined schedule.

When it's triggered (by the schedule or manually), the Lambda function initializes and assumes the IAM role that grants access to AWS services and Amazon Bedrock.

The CrewAI framework loads the agent configurations from YAML files and creates specialized AI agents (the AWS infrastructure security audit crew). The Lambda function runs these agents sequentially to scan AWS resources, analyze security vulnerabilities, and generate comprehensive audit reports.

CloudWatch Logs captures detailed execution information from the Lambda function, with a 365-day retention period and AWS Key Management Service (AWS KMS) encryption to meet compliance requirements. The logs provide visibility into agent activities, error tracking, and performance metrics, which supports effective monitoring and troubleshooting of the security audit process.

The security audit report is automatically generated and stored in the designated Amazon S3 bucket. This automated setup helps maintain consistent security monitoring with minimal operational overhead.

After the initial deployment, the workflow delivers ongoing security audits and reports for your AWS infrastructure without manual intervention.
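The report upload step in this workflow can be sketched with Boto3. This is a minimal illustration, not the repository's code: the `reports/` prefix and the `security_audit_<timestamp>.md` naming scheme are hypothetical, and the real key layout is defined by the pattern's Lambda function.

```python
from datetime import datetime, timezone
from typing import Optional

# Hypothetical key prefix; the pattern's Lambda function defines the real layout.
REPORT_PREFIX = "reports/"


def build_report_key(now: datetime, prefix: str = REPORT_PREFIX) -> str:
    """Build a timestamp-based key such as reports/security_audit_20250101T000000Z.md."""
    stamp = now.astimezone(timezone.utc).strftime("%Y%m%dT%H%M%SZ")
    return f"{prefix}security_audit_{stamp}.md"


def upload_report(bucket: str, markdown: str, now: Optional[datetime] = None) -> str:
    """Upload a Markdown report to Amazon S3 and return its key.

    Requires boto3 and AWS credentials with s3:PutObject on the bucket.
    """
    import boto3  # imported lazily so build_report_key works without boto3

    key = build_report_key(now or datetime.now(timezone.utc))
    boto3.client("s3").put_object(
        Bucket=bucket,
        Key=key,
        Body=markdown.encode("utf-8"),
        ContentType="text/markdown",
    )
    return key
```

Because the timestamp is zero-padded and in UTC, keys built this way sort lexicographically in chronological order, which makes the newest report easy to locate.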

AI agents overview

This pattern creates several AI agents, each with its own role, goal, and tools:

The security analyst agent gathers and analyzes information about AWS resources.

The penetration tester agent identifies vulnerabilities in AWS resources.

The compliance expert agent checks configurations against compliance standards.

The report writer agent compiles the findings into comprehensive reports.

These agents collaborate on a series of tasks, using their collective skills to perform security audits and generate comprehensive reports. (The config/agents.yaml file describes the capabilities and configuration of each agent in this crew.)
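To make the collaboration model concrete, the following framework-free sketch mimics a sequential process in which each agent sees the output of every agent that ran before it. It does not use the CrewAI API; `ToyAgent`, `run_sequential`, and the agent outputs are hypothetical stand-ins. In CrewAI itself, a crew that uses `Process.sequential` similarly makes earlier task outputs available as context to later tasks.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class ToyAgent:
    """Hypothetical stand-in for an agent: a role plus a function of shared context."""
    role: str
    act: Callable[[Dict[str, str]], str]


def run_sequential(agents: List[ToyAgent]) -> Dict[str, str]:
    """Run agents in order; each one receives the outputs of all earlier agents."""
    context: Dict[str, str] = {}
    for agent in agents:
        # Pass a copy so an agent cannot rewrite earlier findings.
        context[agent.role] = agent.act(dict(context))
    return context


crew = [
    ToyAgent("security_analyst", lambda ctx: "bucket-a is public"),
    ToyAgent("penetration_tester", lambda ctx: f"exploitable: {ctx['security_analyst']}"),
    ToyAgent("compliance_expert", lambda ctx: f"violates policy: {ctx['penetration_tester']}"),
    ToyAgent("report_writer", lambda ctx: " | ".join(ctx.values())),
]

results = run_sequential(crew)
print(results["report_writer"])
```

The report writer runs last, so it is the only agent that can see every earlier finding, which mirrors its role in the crew described above.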

Security analysis processing includes the following actions:

The security analyst agent examines the collected data about AWS resources, such as the following:

Amazon Elastic Compute Cloud (Amazon EC2) instances and security groups

Amazon S3 buckets and configurations

IAM roles, policies, and permissions

Virtual private cloud (VPC) configurations and network settings

Amazon RDS databases and security settings

Lambda functions and configurations

Other AWS services within the scope of the audit

The penetration tester agent identifies potential vulnerabilities.

The agents collaborate through the CrewAI framework to share their findings.
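A security analyst agent typically relies on tools that inspect raw API responses. The following sketch shows one such check for Amazon EC2 security groups: it flags ingress rules that open sensitive TCP ports, or all traffic, to `0.0.0.0/0` or `::/0`. The port list and function names are assumptions for illustration; the input follows the shape of the `SecurityGroups` list returned by the EC2 `describe_security_groups` call.

```python
from typing import Any, Dict, List

# Illustrative list of ports that should rarely be reachable from the internet.
SENSITIVE_PORTS = {22: "SSH", 3306: "MySQL", 3389: "RDP", 5432: "PostgreSQL"}
OPEN_CIDRS = {"0.0.0.0/0", "::/0"}


def find_open_ingress(security_groups: List[Dict[str, Any]]) -> List[str]:
    """Flag ingress rules that expose sensitive ports (or all traffic) to the internet."""
    findings = []
    for sg in security_groups:
        for perm in sg.get("IpPermissions", []):
            cidrs = {r.get("CidrIp") for r in perm.get("IpRanges", [])}
            cidrs |= {r.get("CidrIpv6") for r in perm.get("Ipv6Ranges", [])}
            if not cidrs & OPEN_CIDRS:
                continue  # rule is not open to the whole internet
            protocol = perm.get("IpProtocol")
            if protocol == "-1":  # "-1" means all protocols and all ports
                findings.append(f"{sg['GroupId']}: all traffic open to the internet")
                continue
            if protocol not in ("tcp", "6"):
                continue  # this sketch only checks TCP ports
            low, high = perm.get("FromPort", 0), perm.get("ToPort", 65535)
            for port, name in sorted(SENSITIVE_PORTS.items()):
                if low <= port <= high:
                    findings.append(f"{sg['GroupId']}: {name} ({port}) open to the internet")
    return findings


def scan_region(region: str) -> List[str]:
    """Fetch all security groups in a Region and analyze them (requires boto3)."""
    import boto3

    ec2 = boto3.client("ec2", region_name=region)
    groups: List[Dict[str, Any]] = []
    for page in ec2.get_paginator("describe_security_groups").paginate():
        groups.extend(page["SecurityGroups"])
    return find_open_ingress(groups)
```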

Report generation includes the following actions:

The report writer agent compiles the findings from all the other agents.

Security issues are organized by service, severity, and compliance impact.

Remediation recommendations are generated for each identified issue.

A comprehensive security audit report is created in Markdown format and uploaded to the designated Amazon S3 bucket. Historical reports are retained for compliance tracking and for improving security posture over time.
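The grouping and rendering step can be approximated as follows. This sketch groups hypothetical finding dictionaries by service, orders them by an assumed four-level severity scale, and emits Markdown with a remediation note for each issue. The field names and severity labels are not taken from the repository, and compliance impact is left out for brevity.

```python
from collections import defaultdict
from typing import Dict, List

# Assumed severity scale, most severe first.
SEVERITY_ORDER = ["CRITICAL", "HIGH", "MEDIUM", "LOW"]


def render_report(findings: List[Dict[str, str]]) -> str:
    """Group findings by service, sort each group by severity, and render Markdown."""
    by_service: Dict[str, List[Dict[str, str]]] = defaultdict(list)
    for finding in findings:
        by_service[finding["service"]].append(finding)

    lines = ["# Security audit report", ""]
    for service in sorted(by_service):
        lines.append(f"## {service}")
        ordered = sorted(by_service[service], key=lambda f: SEVERITY_ORDER.index(f["severity"]))
        for f in ordered:
            lines.append(f"- **{f['severity']}** {f['issue']} (remediation: {f['remediation']})")
        lines.append("")
    return "\n".join(lines)


findings = [
    {"service": "S3", "severity": "LOW", "issue": "Versioning disabled", "remediation": "Enable versioning"},
    {"service": "EC2", "severity": "HIGH", "issue": "SSH open to 0.0.0.0/0", "remediation": "Restrict ingress"},
    {"service": "S3", "severity": "CRITICAL", "issue": "Public bucket", "remediation": "Enable Block Public Access"},
]
print(render_report(findings))
```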

Logging and monitoring activities include the following:

CloudWatch Logs captures execution details and any errors.

Lambda execution metrics are recorded for monitoring.
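To confirm that the Lambda log group matches the retention and encryption settings described earlier (365 days, AWS KMS), you could run a check like the following. The `/aws/lambda/` prefix is the default naming convention for Lambda log groups; the exact function name is whatever your Terraform deployment created, so treat any name you pass in as an assumption.

```python
from typing import Any, Dict, List

REQUIRED_RETENTION_DAYS = 365  # retention period described for this pattern


def check_log_groups(log_groups: List[Dict[str, Any]]) -> List[str]:
    """Report log groups whose retention or encryption does not match the pattern.

    Each item follows the shape of a logGroups entry from DescribeLogGroups.
    A missing retentionInDays means the logs never expire, which is accepted here.
    """
    problems = []
    for group in log_groups:
        name = group["logGroupName"]
        retention = group.get("retentionInDays")
        if retention is not None and retention < REQUIRED_RETENTION_DAYS:
            problems.append(f"{name}: retention {retention} days < {REQUIRED_RETENTION_DAYS}")
        if "kmsKeyId" not in group:
            problems.append(f"{name}: no AWS KMS key associated")
    return problems


def fetch_log_groups(prefix: str = "/aws/lambda/") -> List[Dict[str, Any]]:
    """List log groups under a prefix, following pagination (requires boto3)."""
    import boto3

    logs = boto3.client("logs")
    groups: List[Dict[str, Any]] = []
    for page in logs.get_paginator("describe_log_groups").paginate(logGroupNamePrefix=prefix):
        groups.extend(page["logGroups"])
    return groups
```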

Note

The aws-security-auditor-crew code comes from the GitHub 3P-Agentic_Frameworks repository.

Automation and scale

You can extend the available agents beyond the four core agents. To integrate additional specialized agents, consider the following new agent types:

A threat intelligence specialist agent can do the following:

Monitor external threat feeds and correlate them with internal findings

Provide context about emerging threats that are relevant to your infrastructure

Prioritize vulnerabilities based on whether they are being actively exploited in the wild

Compliance framework agents can focus on specific regulatory areas such as the following:

Payment Card Industry Data Security Standard (PCI DSS) compliance agent

Health Insurance Portability and Accountability Act of 1996 (HIPAA) compliance agent

System and Organization Controls 2 (SOC 2) compliance agent

General Data Protection Regulation (GDPR) compliance agent

By expanding the available agents thoughtfully, this solution can provide deeper, more specialized security insights while maintaining scalability across large AWS environments. For more information about the implementation approach, tool development, and scaling considerations, see Additional information.

Tools

AWS services

Amazon Bedrock is a fully managed AI service that makes high-performing foundation models (FMs) available through a unified API.

Amazon CloudWatch Logs helps you centralize the logs from all your systems, applications, and AWS services so you can monitor them and archive them securely.

Amazon EventBridge is a serverless event bus service that helps you connect your applications with real-time data from a variety of sources and route it to targets such as AWS Lambda functions, HTTP invocation endpoints that use API destinations, or event buses in other AWS accounts. In this pattern, it's used to schedule and orchestrate agent workflows.

AWS Identity and Access Management (IAM) helps you securely manage access to your AWS resources by controlling who is authenticated and authorized to use them.

AWS Lambda is a compute service that helps you run code without needing to provision or manage servers. It runs your code only when needed and scales automatically, so you pay only for the compute time that you use.

AWS SDK for Python (Boto3) is a software development kit that helps you integrate your Python application, library, or script with AWS services.

Amazon Simple Storage Service (Amazon S3) is a cloud-based object storage service that helps you store, protect, and retrieve any amount of data. In this pattern, it provides object storage for agent artifacts and state management.
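Before deploying, it can help to confirm that your account can call the chosen foundation model at all. The following sketch calls Amazon Bedrock directly through the Boto3 `bedrock-runtime` client and its Converse API; it is not how CrewAI invokes the model internally. `MODEL_ID` is a placeholder and the default Region `us-east-1` is an assumption; replace both with values that are enabled in your account.

```python
from typing import Any, Dict

# Placeholder: replace with a model ID that is enabled in your account and Region.
MODEL_ID = "your-bedrock-model-id"


def build_converse_request(prompt: str, max_tokens: int = 512, temperature: float = 0.2) -> Dict[str, Any]:
    """Build keyword arguments for the Bedrock Runtime Converse API."""
    return {
        "modelId": MODEL_ID,
        "messages": [{"role": "user", "content": [{"text": prompt}]}],
        "inferenceConfig": {"maxTokens": max_tokens, "temperature": temperature},
    }


def ask_model(prompt: str, region: str = "us-east-1") -> str:
    """Send one prompt to Amazon Bedrock and return the text of the reply (requires boto3)."""
    import boto3

    client = boto3.client("bedrock-runtime", region_name=region)
    response = client.converse(**build_converse_request(prompt))
    # The reply is a list of content blocks; keep only the text blocks.
    blocks = response["output"]["message"]["content"]
    return "".join(block["text"] for block in blocks if "text" in block)
```

An access error from `ask_model` usually points to missing model access or IAM permissions rather than to the CrewAI configuration.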

Other tools

Code repository

The code for this pattern is available in the GitHub deploy-crewai-agents-terraform repository.

Best practices

Implement proper state management for Terraform by using an Amazon S3 backend with Amazon DynamoDB state locking. For more information, see Backend best practices in Best practices for using the Terraform AWS Provider.

Use workspaces to separate development, staging, and production environments.

Follow the principle of least privilege and grant the minimum permissions required to perform a task. For more information, see Grant least privilege and Security best practices in the IAM documentation.

Enable detailed logging and monitoring through CloudWatch Logs.

Implement retry mechanisms and error handling for agent operations.
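For agent steps that call external services, a retry decorator with exponential backoff and full jitter is one common approach. The decorator below is a generic sketch, not part of CrewAI or the repository, and `flaky_agent_step` is hypothetical. For AWS API calls made through Boto3, the SDK's own retry configuration (`botocore.config.Config(retries=...)`) may already be sufficient.

```python
import random
import time
from functools import wraps
from typing import Callable, Tuple, Type, TypeVar

T = TypeVar("T")


def retry(
    attempts: int = 4,
    base_delay: float = 1.0,
    max_delay: float = 20.0,
    retry_on: Tuple[Type[BaseException], ...] = (Exception,),
    sleep: Callable[[float], None] = time.sleep,
) -> Callable[[Callable[..., T]], Callable[..., T]]:
    """Retry a call on the given exceptions, using exponential backoff with full jitter."""
    if attempts < 1:
        raise ValueError("attempts must be at least 1")

    def decorator(func: Callable[..., T]) -> Callable[..., T]:
        @wraps(func)
        def wrapper(*args, **kwargs) -> T:
            for attempt in range(attempts):
                try:
                    return func(*args, **kwargs)
                except retry_on:
                    if attempt == attempts - 1:
                        raise  # out of attempts: re-raise the last error
                    cap = min(max_delay, base_delay * 2 ** attempt)
                    sleep(random.uniform(0, cap))  # full jitter spreads out retries
            raise RuntimeError("unreachable")  # the loop always returns or raises

        return wrapper

    return decorator


# Usage: a hypothetical agent step that times out twice, then succeeds.
calls = {"n": 0}


@retry(attempts=3, retry_on=(TimeoutError,), sleep=lambda seconds: None)
def flaky_agent_step() -> str:
    calls["n"] += 1
    if calls["n"] < 3:
        raise TimeoutError("model call timed out")
    return "ok"


print(flaky_agent_step(), calls["n"])  # ok 3
```

Injecting `sleep` keeps the decorator testable; in production you would leave the default `time.sleep` and restrict `retry_on` to errors that are actually transient.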

Epics

| Task | Description | Skills required |
|---|---|---|
| Clone the repository. | To clone this pattern's repository to your local machine, run the following command: | DevOps engineer |
| Modify the environment variables. | To modify the environment variables, do the following: | DevOps engineer |
| Create the infrastructure. | To create the infrastructure, run the following commands: Carefully review the execution plan. If the planned changes are acceptable, run the following command: | DevOps engineer |

| Task | Description | Skills required |
|---|---|---|
| Access the agents. | The agents of the AWS infrastructure security audit and reporting crew are deployed as a Lambda function. To access the agents, do the following: | DevOps engineer |
| (Optional) Configure manual execution of the agents. | The agents are configured to run automatically on a daily schedule (midnight UTC). However, you can trigger them manually by doing the following: For more details, see Testing Lambda functions in the console in the Lambda documentation. | DevOps engineer |
| Access the agent logs for debugging. | The CrewAI agents run in a Lambda environment with the permissions required to perform security audits and store reports in Amazon S3. The output is a Markdown report that provides a comprehensive security analysis of your AWS infrastructure. To support detailed debugging of agent behavior, do the following: | DevOps engineer |
| View the agent execution results. | To view the results of an agent execution, do the following: Reports are stored with timestamp-based file names, as follows: | DevOps engineer |
| Monitor agent execution. | To monitor agent execution through CloudWatch Logs, do the following: | DevOps engineer |
| Customize agent behavior. | To modify the agents or their tasks, do the following: | DevOps engineer |
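As an alternative to the console steps in the table above, you could trigger an audit and fetch the newest report with Boto3. This is a sketch under assumptions: the function name, bucket, and prefix are whatever your Terraform deployment created, and the empty event payload assumes that the handler does not require input. `list_objects_v2` returns at most 1,000 keys per call, so a bucket with many reports would need pagination.

```python
import json
from typing import Any, Dict, Optional


def latest_report_key(list_response: Dict[str, Any]) -> Optional[str]:
    """Return the key of the most recently modified object in a ListObjectsV2 response."""
    contents = list_response.get("Contents", [])
    if not contents:
        return None
    return max(contents, key=lambda obj: obj["LastModified"])["Key"]


def trigger_audit(function_name: str) -> int:
    """Invoke the audit function asynchronously and return the HTTP status (202 on success)."""
    import boto3

    response = boto3.client("lambda").invoke(
        FunctionName=function_name,
        InvocationType="Event",  # asynchronous: does not wait for the audit to finish
        Payload=json.dumps({}).encode("utf-8"),
    )
    return response["StatusCode"]


def read_latest_report(bucket: str, prefix: str) -> Optional[str]:
    """Download the newest report under a prefix as text (requires boto3)."""
    import boto3

    s3 = boto3.client("s3")
    key = latest_report_key(s3.list_objects_v2(Bucket=bucket, Prefix=prefix))
    if key is None:
        return None
    return s3.get_object(Bucket=bucket, Key=key)["Body"].read().decode("utf-8")
```

An asynchronous invocation avoids client-side timeouts for long audits; the report appears in Amazon S3 only after the function finishes, so poll or wait before calling `read_latest_report`.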

| Task | Description | Skills required |
|---|---|---|
| Delete the created resources. | To delete all the infrastructure created by this pattern, run the following command: Warning: The following command permanently deletes all resources created by this pattern. The command prompts for confirmation before deleting any resources. Carefully review the destroy plan. If the planned deletions are acceptable, run the following command: | DevOps engineer |

Troubleshooting

| Issue | Solution |
|---|---|
| Agent behavior | For more information about this issue, see Test and troubleshoot agent behavior in the Amazon Bedrock documentation. |
| Lambda networking issues | For more information about these issues, see Troubleshoot networking issues in Lambda in the Lambda documentation. |
| IAM permissions | For more information about these issues, see Troubleshooting IAM in the IAM documentation. |

Related resources

AWS blogs

AWS documentation

Other resources

Additional information

This section contains information about the implementation approach, tool development, and scaling considerations related to the earlier discussion in Automation and scale.

Implementation approach

Consider the following approach for adding agents:

Agent configuration:

Add new agent definitions to the config/agents.yaml file.

Define specialized backstories, goals, and tools for each agent.

Configure memory and analysis capabilities according to each agent's specialty.

Task orchestration:

Update the config/tasks.yaml file to include the new agent-specific tasks.

Create dependencies between tasks to ensure that information flows correctly.

Implement parallel task execution where appropriate.
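Task dependencies and parallelism can be reasoned about as a dependency graph. The following sketch uses Python's standard `graphlib` module (available since Python 3.9, the minimum version this pattern requires) to group hypothetical tasks into waves; tasks in the same wave do not depend on each other and are candidates for parallel execution. In CrewAI, dependencies are usually expressed through a task's `context` list and asynchronous execution through `async_execution`; check the CrewAI documentation for your version.

```python
from graphlib import TopologicalSorter  # standard library since Python 3.9
from typing import Dict, List, Set


def execution_waves(dependencies: Dict[str, Set[str]]) -> List[List[str]]:
    """Group tasks into waves; each wave depends only on tasks from earlier waves."""
    sorter = TopologicalSorter(dependencies)
    sorter.prepare()  # raises graphlib.CycleError on circular dependencies
    waves = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # every task whose prerequisites are done
        waves.append(ready)
        sorter.done(*ready)
    return waves


# Hypothetical task names, each mapped to the tasks it depends on.
tasks = {
    "collect_resources": set(),
    "threat_intel_lookup": set(),
    "vulnerability_scan": {"collect_resources"},
    "compliance_check": {"collect_resources"},
    "correlate_threats": {"vulnerability_scan", "threat_intel_lookup"},
    "write_report": {"compliance_check", "correlate_threats"},
}

for number, wave in enumerate(execution_waves(tasks), start=1):
    print(number, wave)
```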

Technical implementation

The following is an addition to the agents.yaml file for a proposed threat intelligence specialist agent:

```yaml
# Example new agent configuration in agents.yaml
threat_intelligence_agent:
  name: "Threat Intelligence Specialist"
  role: "Cybersecurity Threat Intelligence Analyst"
  goal: "Correlate AWS security findings with external threat intelligence"
  backstory: "Expert in threat intelligence with experience in identifying emerging threats and attack patterns relevant to cloud infrastructure."
  verbose: true
  allow_delegation: true
  tools:
    - "ThreatIntelligenceTool"
    - "AWSResourceAnalyzer"
```
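Because a malformed entry in `config/agents.yaml` only surfaces when the Lambda function runs, a small pre-deployment check can save a deployment cycle. The following sketch assumes that PyYAML is installed and that `role`, `goal`, and `backstory` are the required fields; adjust the list to match the CrewAI version you use.

```python
from typing import Any, Dict, List

REQUIRED_FIELDS = ("role", "goal", "backstory")  # assumed required agent attributes


def validate_agents(config: Dict[str, Any]) -> List[str]:
    """Return problems found in a parsed agents.yaml mapping (agent key -> definition)."""
    problems = []
    for agent_key, definition in config.items():
        if not isinstance(definition, dict):
            problems.append(f"{agent_key}: definition must be a mapping")
            continue
        for field in REQUIRED_FIELDS:
            value = definition.get(field)
            if not isinstance(value, str) or not value.strip():
                problems.append(f"{agent_key}: missing or empty '{field}'")
        if not isinstance(definition.get("tools", []), list):
            problems.append(f"{agent_key}: 'tools' must be a list")
    return problems


def load_and_validate(path: str = "config/agents.yaml") -> List[str]:
    """Parse the YAML file and validate it (requires PyYAML)."""
    import yaml

    with open(path, encoding="utf-8") as handle:
        return validate_agents(yaml.safe_load(handle) or {})
```

Running `load_and_validate` in a CI step before `terraform apply` would catch these errors without invoking the Lambda function.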

Tool development

With the CrewAI framework, you can take the following steps to improve the effectiveness of your security audit crew:

Create custom tools for new agents.

Integrate external threat intelligence APIs.

Develop specialized analyzers for different AWS services.
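As an example of a specialized analyzer, the following sketch checks the S3 Block Public Access settings of a bucket. A custom CrewAI tool could wrap a function like this one. The function names are hypothetical; only the Boto3 `get_public_access_block` call, its response fields, and the `NoSuchPublicAccessBlockConfiguration` error code come from the Amazon S3 API.

```python
from typing import Dict, List, Optional

BPA_SETTINGS = ("BlockPublicAcls", "IgnorePublicAcls", "BlockPublicPolicy", "RestrictPublicBuckets")


def analyze_public_access_block(bucket: str, config: Optional[Dict[str, bool]]) -> List[str]:
    """Report Block Public Access settings that are missing or disabled for a bucket.

    `config` is the PublicAccessBlockConfiguration from GetPublicAccessBlock,
    or None when the bucket has no configuration.
    """
    if config is None:
        return [f"{bucket}: no Block Public Access configuration"]
    return [f"{bucket}: {name} is disabled" for name in BPA_SETTINGS if not config.get(name, False)]


def fetch_public_access_block(bucket: str) -> Optional[Dict[str, bool]]:
    """Read the bucket-level configuration, or None if none is set (requires boto3)."""
    import boto3
    from botocore.exceptions import ClientError

    s3 = boto3.client("s3")
    try:
        return s3.get_public_access_block(Bucket=bucket)["PublicAccessBlockConfiguration"]
    except ClientError as err:
        if err.response["Error"]["Code"] == "NoSuchPublicAccessBlockConfiguration":
            return None
        raise
```

Keeping the analysis separate from the API call lets the same logic be unit-tested and reused by any agent tool wrapper.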

Scaling considerations

When you expand your AWS infrastructure security audit and reporting system to handle larger environments or more comprehensive audits, consider the following scaling factors:

Compute resources

Increase the Lambda memory allocation to handle additional agents.

Consider distributing agent workloads across multiple Lambda functions.
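One way to distribute the workload is to fan out asynchronous Lambda invocations, each covering a subset of Regions. This is a sketch under assumptions: the payload schema (`regions`, `agents`) is hypothetical, and the deployed function would need to be changed to read it.

```python
import json
from typing import Any, Dict, List


def build_fanout_payloads(regions: List[str], agents: List[str], batch_size: int) -> List[Dict[str, Any]]:
    """Split the audit into payloads that each cover at most batch_size Regions."""
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    return [
        {"regions": regions[i:i + batch_size], "agents": list(agents)}
        for i in range(0, len(regions), batch_size)
    ]


def fan_out(function_name: str, payloads: List[Dict[str, Any]]) -> None:
    """Start one asynchronous Lambda invocation per payload (requires boto3)."""
    import boto3

    client = boto3.client("lambda")
    for payload in payloads:
        client.invoke(
            FunctionName=function_name,
            InvocationType="Event",  # fire-and-forget; each invocation runs independently
            Payload=json.dumps(payload).encode("utf-8"),
        )
```

With this design, each invocation produces a partial result, so a final step would still be needed to merge the partial reports.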

Cost management

Monitor Amazon Bedrock API usage as the number of agents grows.

Implement selective agent activation based on the scope of the audit.
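To track Amazon Bedrock usage per agent, you can sum the `usage` field that the Converse API returns with each response (`inputTokens`, `outputTokens`, `totalTokens`). The following sketch aggregates those counts by agent and estimates cost from per-1,000-token prices that you supply. The prices are inputs, not real Amazon Bedrock rates, so look up current pricing for your model.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple

TOKEN_FIELDS = ("inputTokens", "outputTokens", "totalTokens")


def usage_by_agent(records: Iterable[Tuple[str, Dict[str, int]]]) -> Dict[str, Dict[str, int]]:
    """Sum Converse API usage counts per agent from (agent name, usage dict) pairs."""
    totals: Dict[str, Dict[str, int]] = defaultdict(lambda: dict.fromkeys(TOKEN_FIELDS, 0))
    for agent, usage in records:
        for field in TOKEN_FIELDS:
            totals[agent][field] += usage.get(field, 0)
    return dict(totals)


def estimate_cost(usage: Dict[str, int], input_price_per_1k: float, output_price_per_1k: float) -> float:
    """Estimate cost from token counts and caller-supplied per-1,000-token prices."""
    return (usage["inputTokens"] / 1000) * input_price_per_1k + (usage["outputTokens"] / 1000) * output_price_per_1k
```

Per-agent totals make it easier to decide which agents to activate selectively for a given audit scope.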

Collaboration efficiency

Optimize how information is shared between agents.

Implement hierarchical agent structures for complex environments.

Knowledge base enhancement

Provide agents with knowledge bases that are specialized in their domains.

Regularly update agent knowledge with new security best practices.